Introduction

This section describes methods to rigorously assess and improve the curriculum. The Preventing Crashes leadership uses them for ongoing program development, and organizations implementing the program may also apply them. These methods may be used to:

- validate the commitment of time and money

- provide insight into suggestions for program refinement or growth

- support advocacy for resources for further change or implementation

Action Research

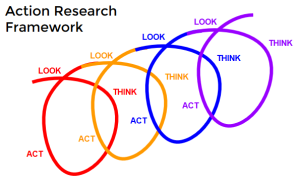

Akin to quality improvement (QI), action research (AR) uses progressive cycles of data gathering, analysis, and action to provide a systematic foundation for ongoing implementation and change, when needed.

There are two main differences between AR and QI. The first is the nature of the people involved; with AR, researchers tend to be involved in the longer term, with contributions to iterative cycles.

The second is the format of the cycle. QI uses a four-step Plan-Do-Study-Act (PDSA) model, while one form of action research uses a three-step Look-Think-Act cycle (Stringer, 2007), as described below.

Look

Relevant questions should be posed by leaders interested in validating and improving the program.

Questions may be asked in a variety of formats, including:

- Observation

- Focus Groups

- Surveys

Data gathering may be easily incorporated into training sessions, but the time needed should be kept to a minimum. Learners and facilitators should also be assured that data gathering is meant to build and sustain the program.

Data may be recorded on paper or in an electronic format, depending on local context and analysis structure. It is important to maintain data security, with regard to both privacy and the risk of loss or theft.

Think

A common group of researchers, composed of organizational leaders, should be involved in data analysis over time. Learner or facilitator representatives may also be involved in the initial analysis, or in reviewing the findings or themes. Analysis may take different forms, based on the questions asked.

Quantitative description may include the number of participants, the time or money needed, or test scores, presented in tables or charts using simple statistics. A more in-depth exploration of participants’ experience may be done using qualitative methods. Complex frameworks such as grounded theory or phenomenology may be considered, but a simpler yet effective methodology termed qualitative description may also be very helpful in the clinical context.

Qualitative description focuses on providing a systematic yet straightforward summary of data. The summary may be organized into themes that emerge, and if a set of coding templates is used beforehand, the templates may be modified or even discarded as new data are generated (Sandelowski, 2000). The analysis should remain close to the surface of the words used by participants, with descriptive quotes included. Some exploration of the meaning behind the data is appropriate, but interpretation is more superficial than in the other frameworks described above. Interpretation tends to rest in a description of perceptions, attitudes, or feelings.

Importantly, suggestions for improvement should be carefully catalogued.
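As an illustration of the simple statistics mentioned for quantitative description, the following minimal Python sketch summarizes test scores before and after a session. The function name `summarize_scores` and the score values are hypothetical and chosen only for illustration; they are not program data, and a spreadsheet would serve equally well.

```python
from statistics import mean, median


def summarize_scores(scores):
    """Return simple descriptive statistics for a list of numeric test scores."""
    if not scores:
        raise ValueError("scores must not be empty")
    return {
        "n": len(scores),                 # number of participants
        "mean": round(mean(scores), 1),   # average score, one decimal place
        "median": median(scores),         # middle score
        "min": min(scores),
        "max": max(scores),
    }


# Hypothetical pre- and post-session test scores (illustrative values only).
pre_scores = [55, 60, 70, 65, 50]
post_scores = [75, 80, 85, 70, 90]

pre = summarize_scores(pre_scores)
post = summarize_scores(post_scores)

# Print a small table that could be pasted into a report.
print(f"{'':<6}{'n':>4}{'mean':>8}{'median':>8}{'min':>6}{'max':>6}")
for label, row in (("Pre", pre), ("Post", post)):
    print(f"{label:<6}{row['n']:>4}{row['mean']:>8}{row['median']:>8}"
          f"{row['min']:>6}{row['max']:>6}")
```

A summary of this kind describes the data only; it does not by itself show that a change in scores was caused by the program.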

Act

It is up to researchers, leaders, and participants to determine a framework to guide decision making for program improvement. A simple, low-cost suggestion with widespread endorsement should be implemented, but change tends to be more complex if suggestions have varied support, or if significant resources are needed.

Suggestions with varied support

If there is significant disagreement on the merits of a proposed change, the decision should be made using voting or consensus by program leaders. This decision should be made with transparency, with the process and results shared with those affected.

Suggestions if significant resources are needed

If leaders deem that a proposed change requires a significant investment of time or finances, the cost should be weighed against the expected benefit. These decisions can be difficult, but may be supported using a number of strategies:

- the testing of a small change through subsequent AR cycles, before wider implementation

- the engagement of supportive learners or facilitators to offset time or financial costs

Subsequent cycles should occur until the program is operating smoothly, at which point their frequency may be decreased. Data gathering may continue during sessions, with analysis of the accumulated data occurring as needed, and at least yearly.

Resources and References

Stringer E. 2007. Action Research (3rd ed.). Thousand Oaks, California: Sage Publications.